We demonstrate the need for, and the potential of, systematically integrated vision and semantics solutions for visual sensemaking against the backdrop of autonomous driving. The developed neurosymbolic framework is domain-independent. The case of autonomous driving is designed to serve as an exemplar for online visual sensemaking in diverse cognitive interaction settings, in view of selected human-centred AI technology design considerations.

Keywords: Cognitive Vision, Deep Semantics, Declarative Spatial Reasoning, Knowledge Representation and Reasoning, Commonsense Reasoning, Visual Abduction, Answer Set Programming, Autonomous Driving, Human-Centred Computing and Design, Standardisation in Driving Technology, Spatial Cognition and AI.

As a use case, we focus on the significance of human-centred visual sensemaking (e.g., semantic representation and explainability, question answering, and commonsense interpolation) in safety-critical autonomous driving situations. A general neurosymbolic method for online visual sensemaking using answer set programming (ASP) is systematically formalised and fully implemented. The method integrates the state of the art in visual computing. It is developed as a modular framework that is generally usable within hybrid architectures for real-time perception and control. We evaluate and demonstrate it with the community-established benchmarks KITTIMOD, MOT-2017, and MOT-2020.

Our findings show that the ASP4BIM-based prototype supports a range of novel query services for formally analysing the impact of crowds on pedestrians. These analyses are logically derived through qualitative deductive rules, and they complement other powerful crowd analysis approaches such as agent-based simulation. We evaluate our prototype on the Urban Sciences Building at Newcastle University, a large, state-of-the-art living laboratory and multipurpose academic building.

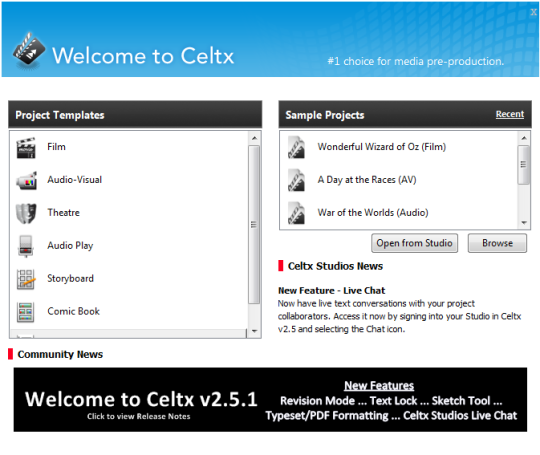

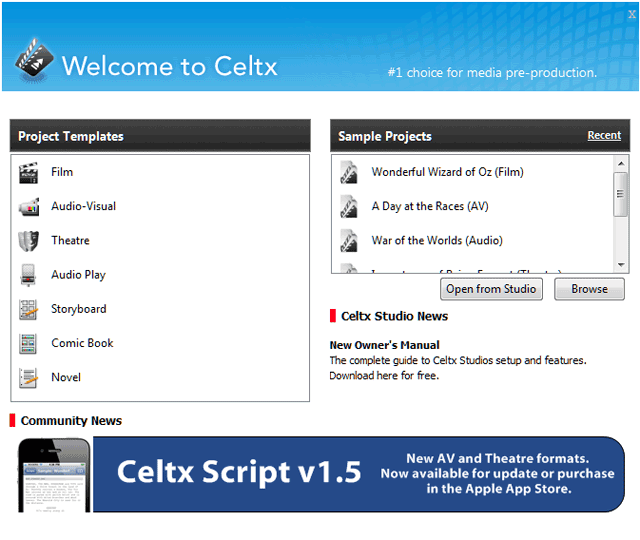


As a first proof of concept of our approach, we have implemented a prototype crowd analysis software tool in our new system ASP4BIM. The tool was developed specifically to support architectural design reasoning for public-facing buildings with complex signage systems and diverse intended user groups. In particular, we focus on the co-presence of different user groups. Using the concept of spatial artefacts, we also examine how this co-presence affects the perceptual and functional affordances of spatial layouts.

We present a framework and prototype software tool for logically reasoning about occupant perception and behaviour in the context of dynamic aspects of buildings in operation, based on qualitative deductive rules. A building occupant’s experiences are not passive responses to environmental stimuli, but are the results of multifaceted, prolonged interactions between people and space.
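The ASP4BIM rules themselves are written in answer set programming and are not reproduced in this text. The Python sketch below only illustrates the general shape of a qualitative deductive rule over spatial artefacts: a sign is derived to be obstructed whenever a crowd region is not disconnected from the sign's visibility space. Several parts are hypothetical simplifications and are not the prototype's actual representation:

- the axis-aligned rectangles;
- the coarse three-way region relation;
- the names `Rect`, `qualitative_relation`, `derive_obstructed`, `exit_sign`, `lift_sign`, and `cafe_queue`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned floor-plan region (hypothetical stand-in for a spatial artefact)."""
    x1: float
    y1: float
    x2: float
    y2: float


def qualitative_relation(a: Rect, b: Rect) -> str:
    """Coarse relation of region a to region b: 'disconnected', 'inside', or 'overlaps'.

    Regions that only touch along a boundary are treated as disconnected.
    """
    if a.x2 <= b.x1 or b.x2 <= a.x1 or a.y2 <= b.y1 or b.y2 <= a.y1:
        return "disconnected"
    if b.x1 <= a.x1 and a.x2 <= b.x2 and b.y1 <= a.y1 and a.y2 <= b.y2:
        return "inside"
    return "overlaps"


def derive_obstructed(visibility_spaces: dict[str, Rect],
                      crowds: dict[str, Rect]) -> set[tuple[str, str]]:
    """Apply one hypothetical deductive rule to every (sign, crowd) pair:

    obstructed(Sign, Crowd) holds if the crowd region is not disconnected
    from the sign's visibility space.
    """
    return {
        (sign, crowd)
        for sign, vis in visibility_spaces.items()
        for crowd, region in crowds.items()
        if qualitative_relation(region, vis) != "disconnected"
    }


# Hypothetical facts: visibility spaces of two signs and one crowd region.
visibility_spaces = {
    "exit_sign": Rect(0, 0, 4, 2),
    "lift_sign": Rect(10, 0, 14, 2),
}
crowds = {"cafe_queue": Rect(3, 1, 6, 3)}

obstructed = derive_obstructed(visibility_spaces, crowds)
print(sorted(obstructed))  # [('exit_sign', 'cafe_queue')]
```

In an ASP setting, a rule of this kind would roughly correspond to a single clause such as `obstructed(S, C) :- visibility_space(S, V), crowd(C, R), not disconnected(R, V).`, with the solver deriving all consequences. The Python loop only mimics that behaviour for one rule.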
